Semi Stocks Had Their Biggest Month This Year

Computex 2025, Saudi Deals, China Trade Hiatus & Nvidia’s Pedal To The Metal (With Updated Price Targets)

At The Pragmatic Optimist, we help investors navigate the evolving AI innovation landscape, identify rock-solid businesses with strong growth trajectories and operational grit, and make long-term investments in the space with a high probability of success.


🎥Let’s Set The Stage

Sell in May and go away?

So far, semiconductor stocks have swung in the exact opposite direction of that conventional wisdom, with many stocks in the semiconductor cohort delivering alpha this month. The big percentage move up this month means semiconductors have scaled almost all the brick walls (headwinds) that they crashed into, such as DeepSeek fears and mounting concerns over tariffs.

What changed this month? 🤔🤔🤔

The month of May has been kind to the AI trade with three main catalysts that have strong potential to lift semiconductors and AI stocks out of muddy waters.

It all started with signs that global trade might return to normal, with a 90-day tariff hiatus announced between China and the US. Then, the Trump 2.0 administration’s visit to the Middle East opened doors to big flashy deals for Nvidia (NVDA), AMD (AMD) & other American semiconductor vendors. On the heels of the Middle East deals came more reassuring news from the US DoC (Department of Commerce) that the AI Diffusion rule would be rescinded.

And finally, Nvidia used this week’s annual Computex 2025 event in Taiwan to announce that it is opening up its AI ecosystem to a multitude of semiconductor partners & vendors, including custom silicon players such as Marvell (MRVL), Astera Labs (ALAB), etc.

Since this is a broad range of catalysts for investors to digest quickly, we decided to do a deep dive on how these catalysts may impact the semiconductor cohort of stocks moving forward.

Let’s start with Computex 2025.

Computex 2025: Nvidia’s AI Ecosystem Gets Wider

2025’s Computex event was always going to be about Nvidia, especially after Computex 2024 officially catapulted Jensen Huang to rock star status 😉.

Computex 2025 is still in progress in Taipei, Taiwan, so Huang might still relive more of those rockstar moments from last year. But so far, Nvidia’s biggest announcement this week, in our view, has been to finally open up its AI ecosystem to a carefully selected roster of semiconductor partners, peers, and vendors.

Nvidia announced that it is opening up access to its proprietary NVLink protocol by releasing NVLink Fusion, a brand-new IP package that allows hyperscalers, neoclouds, and other deep-pocketed clients to use the company’s key NVLink technology for their own custom rack-scale designs.

A quick recap on NVLink: it is Nvidia’s own proprietary interconnect fabric that allows Nvidia GPUs like Hopper, Blackwell, Rubin, etc. to communicate with one another, and also allows Nvidia GPUs to talk to Nvidia’s CPUs like Grace, Vera, etc. Nvidia’s GPUs use the NVLink interconnect to access each other’s memory directly while sharing other resources, allowing for easy scaling up or scaling down of resources depending on the AI workload.

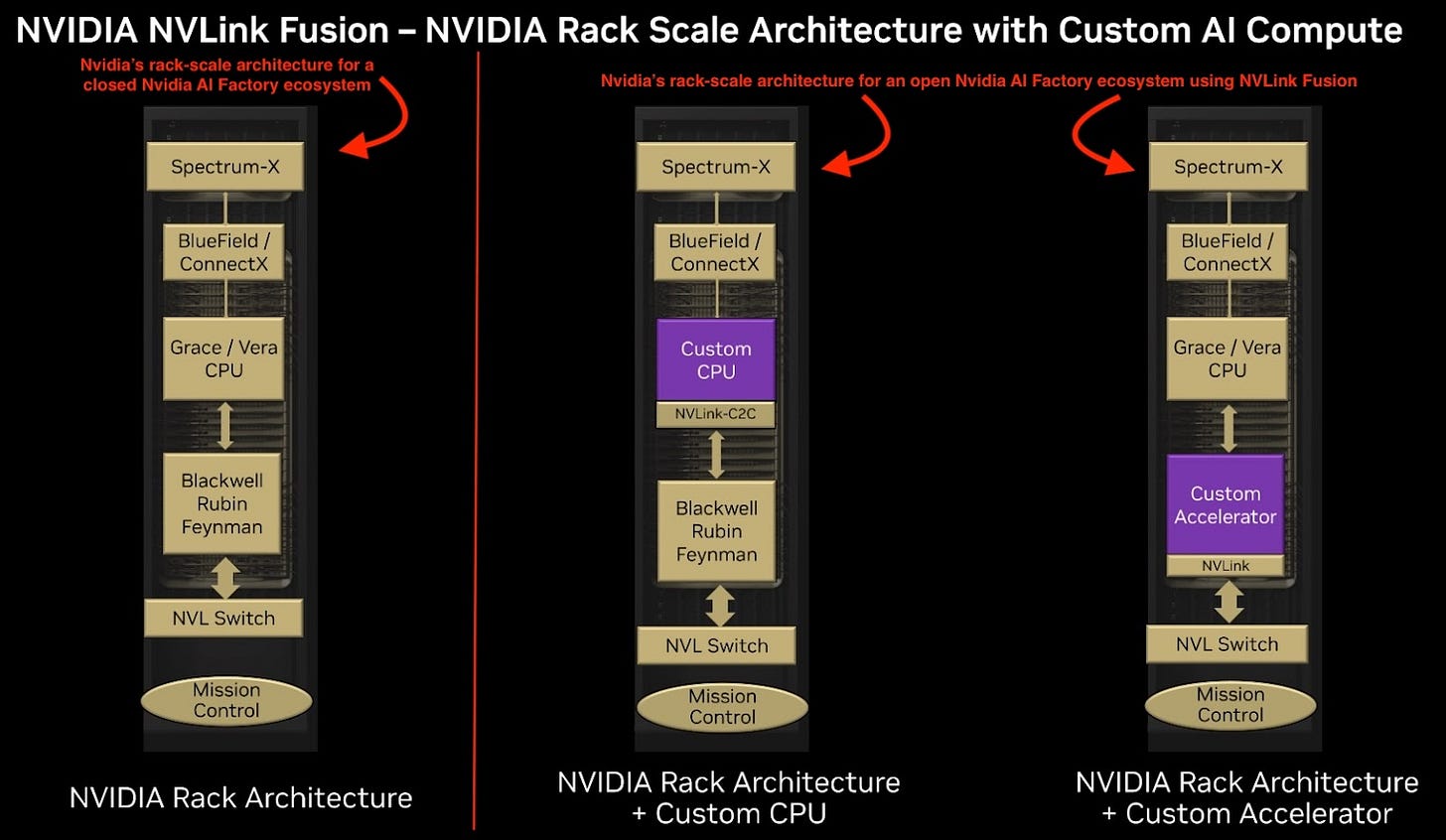

This was the interconnect technology used in scale-up networks deployed at AI data centers, as seen on the left-hand side of the chart below.

But with NVLink Fusion, Nvidia generously opened up its rack architecture ecosystem to semiconductor vendors that often build peer products competing with Nvidia. To help get things started, Nvidia has already onboarded a large roster of partners.

As pointed out in Nvidia’s technical blog, there are only two approved use cases when utilizing NVLink Fusion (also shown in Exhibit B above):

The first approved use case is connecting custom CPUs to Nvidia’s GPUs (Blackwell or above). This means Nvidia’s Grace CPUs would be replaced with custom CPUs that would interconnect to Nvidia GPUs via the short-range NVLink C2C (chip-to-chip) interconnect silicon chiplets. (A little bit on Nvidia C2C after we discuss the second approved use case.)

For now, Qualcomm (QCOM) and Fujitsu are the only two vendors officially onboarded onto Nvidia’s custom CPU program, while Marvell, Astera Labs, Alchip & MediaTek have been officially brought in as AI Connectivity partners to license NVLink for interconnectivity solutions.

The second approved use case allows hyperscalers to use their own custom accelerators, ASICs, or XPUs instead of Nvidia’s GPUs in the rack to talk to Nvidia’s CPU (Grace and above). Per The Register’s reading of Nvidia’s tech blog, the interconnectivity between Nvidia’s CPU and the XPU can be achieved either by integrating NVLink IP and ports into the design of the XPU or via an interconnect chiplet packaged alongside a supported XPU.

For Nvidia’s interconnect chiplets, AI Connectivity partners we listed earlier can be tapped for licensing and manufacturing.

But if an XPU were being designed from the ground up for an NVLink Fusion-approved server rack, then only approved vendors such as Marvell, Alchip, etc. could make those XPUs. And for design purposes, semiconductor IP from Cadence (CDNS) or Synopsys (SNPS) can be utilized.

For use case #2, The Register also adds:

“In theory, this should open the door to superchip-style compute assemblies that feature any combination of CPUs and GPUs from the likes of Nvidia, AMD, Intel, and others, but only so long as Nv is involved.

You couldn't, for example, connect an Intel CPU to an AMD GPU using NVLink Fusion. Nvidia isn't opening the interconnect standard entirely, and if you want to use its interconnect with your ASIC, then you'll be using its CPU, or vice versa.”
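The pairing rule described above can be written down as a small check. This is only a sketch of the rule as this post and The Register describe it: the `RackPairing` type and `fusion_use_case` function are hypothetical names made up for illustration, not part of any Nvidia tooling, and the sketch deliberately ignores the approved-vendor list.

```python
from dataclasses import dataclass
from typing import Optional

NVIDIA = "nvidia"


@dataclass(frozen=True)
class RackPairing:
    """Hypothetical description of one CPU-to-accelerator link in a rack."""
    cpu_vendor: str
    accelerator_vendor: str


def fusion_use_case(pairing: RackPairing) -> Optional[str]:
    """Return which NVLink Fusion use case a pairing falls under, if any.

    Assumption: the only rule modeled is "exactly one side is Nvidia".
    Whether a given vendor is actually approved is not modeled here.
    """
    cpu_is_nvidia = pairing.cpu_vendor.lower() == NVIDIA
    accel_is_nvidia = pairing.accelerator_vendor.lower() == NVIDIA

    if accel_is_nvidia and not cpu_is_nvidia:
        return "use case 1: custom CPU + Nvidia GPU"
    if cpu_is_nvidia and not accel_is_nvidia:
        return "use case 2: Nvidia CPU + custom XPU"
    # Both Nvidia is ordinary NVLink, not Fusion; neither Nvidia is not allowed.
    return None


if __name__ == "__main__":
    for cpu, accel in [("Qualcomm", "Nvidia"), ("Nvidia", "CustomXPU"), ("Intel", "AMD")]:
        print(cpu, "+", accel, "->", fusion_use_case(RackPairing(cpu, accel)))
```

The Intel CPU plus AMD GPU combination from The Register’s example falls through to `None`, which is the "only so long as Nv is involved" condition in code form.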

In the keynote, Huang also seemed to allude to a theoretical scenario where any XPU, whether custom silicon from vendors like Broadcom (AVGO) or merchant GPUs like AMD’s MI300X, could fit into use case #2.

Here is an excerpt from Huang’s presentation aimed directly at hyperscalers:

“So, your AI infrastructure could have some Nvidia, a lot of yours. A lot of yours, a lot of CPUs, a lot of ASICs and maybe a lot of Nvidia GPUs as well. And so, in any case, you have the benefit of using the NVLink infrastructure and the NVLink ecosystem, and its connected perfectly to Spectrum-X.”

But we think it's highly unlikely that Nvidia will allow companies like Broadcom and AMD to be a part of this expanded NVLink Fusion ecosystem. That’s also a major reason why these companies were conspicuously left out of the announcement and partner list.

We think Nvidia made a smart move by selectively opening up its ecosystem to a restricted set of vendors, especially on the XPU and the connectivity solutions front. NVLink Fusion is Nvidia’s way of showing that it is opening up the walled garden of its “AI factory” vision while, at the same time, further protecting itself against the growing might of the UALink consortium. Broadcom and AMD are large sponsors of the UALink consortium, which is making large strides in launching highly competitive networking technology standards.

Nvidia had probably planned for this situation all along, which is why it made its earliest attempt to open up NVLink at GTC 2022. There, Nvidia revealed C2C as its proprietary interconnect solution: a high-bandwidth, low-power, low-latency connection between CPUs and GPUs that is key to accelerating AI workloads.

With HPC (high performance computing) GPU sales possibly slowing down relative to Nvidia’s own historically astronomical growth rates, along with the AI Connectivity market speeding up, Nvidia believes the time is right to open up its AI ecosystem and attack a fast-growing AI Connectivity market.

We recently wrote an extensive primer post about the AI Connectivity market where we also talked about Nvidia’s networking revenues and how the networking market is primed to grow faster over the next couple of years. The way we see it, opening its ecosystem with NVLink Fusion could give Nvidia’s networking revenues a much-needed boost.

Still, the larger question for investors is how Nvidia plans to support the growth rates of its AI & HPC GPUs. Is it by addressing another fast-growing market for GPUs with neoclouds?

Computex 2025: Nvidia’s GPUs Found A Way Around Hyperscaler Demand

Turning our attention to neoclouds, the group includes companies such as CoreWeave (CRWV) and Nebius (NBIS), which are at the forefront of a rapidly growing corner of the cloud market.

Earlier this month, Synergy Research published their findings that AI helped the “cloud market growth rate jump to almost 25% in Q1” this year. What they also found is that CoreWeave, the largest neocloud vendor by revenues, is “on the verge of breaking into the top twelve ranking of cloud providers, thanks to its AI and GPU services.”

We just heard from Nebius this week, and their Q1 earnings revealed that while sales grew almost 400% to $55.3M, the European neocloud company was rapidly increasing capex to add more GPU capacity.

Meanwhile, peers such as Crusoe and CoreWeave are guzzling up large volumes of debt to stay ahead of the demand curve for GPU capacity. CoreWeave just announced that they will be spending at least $20B on AI infrastructure and data center capacity (mostly Nvidia GPUs) “to meet booming demand from clients, including Microsoft.”

So it made complete sense why Nvidia used Computex 2025 to announce DGX Cloud Lepton, Nvidia’s cloud service marketplace linking AI developers in need of on-demand GPUs with cloud service providers who have real-time GPU capacity.

Alexis Bjorlin, VP of DGX Cloud at Nvidia, told The Register that Lepton was more like “a ridesharing app, but rather than connecting riders to drivers, it connects developers to GPUs.”

Bjorlin’s comment means that, with Lepton, Nvidia becomes a sort of official aggregator collecting economic rent as the gatekeeper of this marketplace, while it directly connects AI developers (the demand side of the equation) to GPU cloud vendors (the supply side). CoreWeave, Nebius, Crusoe, Lambda, etc., are all GPU cloud vendors signed up by Nvidia as part of Lepton’s early access program.

Since this is part of the DGX Cloud ecosystem, Nvidia will also have multiple opportunities to push its software stack, which includes NIM (Nvidia Inference Microservices) & NeMo microservices, Serverless Inference services, etc.

What this does is give Nvidia stronger legs to push its product suite of GPUs, systems, and services in the cloud market, which, according to Synergy Research, generated ~$380B in annualized revenues last quarter and is posting very healthy double-digit growth rates.

Is the Middle East Taking China’s Place As AI Customers?

If this week was about Computex, the week before was about the Middle East (ME) region becoming the new hot market for America’s semiconductor companies.

Last week started off with the US and China announcing a hiatus to their trade war, which, for now, gave markets a big sigh of relief. Why? Because during the truce period, the US would cut tariffs on Chinese goods from 145% to 30%, a far deeper cut than the 80% tariff level that Trump 2.0 had implied in a separate post on social media. Also, under the terms of the deal, China would reduce tariffs on American imports from 125% to 10%.
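To make the size of the surprise concrete, the tariff figures quoted above can be compared in percentage points. This is a minimal sketch using only the rates mentioned in this post; the function name `cut_in_points` is a hypothetical helper for illustration.

```python
def cut_in_points(before_pct: int, after_pct: int) -> int:
    """Size of a tariff cut in percentage points."""
    return before_pct - after_pct


# Rates as quoted in this post.
us_cut_actual = cut_in_points(145, 30)      # US tariffs on Chinese goods under the truce
us_cut_implied = cut_in_points(145, 80)     # the 80% level floated on social media
china_cut_actual = cut_in_points(125, 10)   # China's tariffs on American imports

print(f"US cut under the truce:   {us_cut_actual} points")
print(f"US cut implied by 80%:    {us_cut_implied} points")
print(f"China cut under the truce: {china_cut_actual} points")
print(f"Extra relief vs. the 80% level: {us_cut_actual - us_cut_implied} points")
```

Both sides cut by the same number of points, and the US cut lands 50 points below the 80% level that had been floated.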

Obviously, this is not over, and we believe markets will get to witness the meat of the progress in the US/China trade negotiations only towards the end of the 90-day hiatus period, which will be some time in the July-August time frame this year.

At the moment, the focus has moved very quickly from the US/China trade negotiations to the rapid-fire rounds of deal-making in the ME region, which saw Nvidia and AMD strike successive deals with Humain, an AI startup in the region backed by Saudi Arabia’s PIF.

According to Nvidia+Humain’s press release, Humain will be building AI Factories in the ME region with a projected capacity of up to 500 MW. They plan to achieve that by deploying Nvidia’s GPU server systems over the next 5 years, but more importantly, Humain is looking at deploying “18,000 NVIDIA GB300 Grace Blackwell AI supercomputer(s) with NVIDIA InfiniBand networking” in the first phase itself.

We think this is highly consequential for Nvidia.

If we adopt this analyst’s assumption that each Blackwell server system is priced at $3-4M, then 18,000 systems at the $3.5M midpoint works out to roughly $63B. In other words, our estimates suggest that Nvidia’s total Data Center end market revenue base of $115B would get a ~$63B boost in revenues just from Humain’s first phase of GPU deployments. We will get to hear how Huang & team think about these deals from the ME region in next week’s earnings call, but we’re quite sure it will be positive.
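For readers who want to rerun the estimate with their own inputs, here is a rough back-of-the-envelope sketch. It takes the figures in this post at face value (18,000 systems, $3-4M per system, a $115B Data Center revenue base); treating each of the 18,000 units as a full server system at that price is the analyst assumption cited above, not a confirmed figure, and the variable names are ours.

```python
# All inputs are assumptions taken from this post, in whole US dollars.
SYSTEMS_PHASE_ONE = 18_000
PRICE_LOW = 3_000_000
PRICE_HIGH = 4_000_000
DATA_CENTER_BASE = 115_000_000_000


def deployment_revenue(units: int, price_per_unit: int) -> int:
    """Revenue implied by selling `units` systems at `price_per_unit` each."""
    return units * price_per_unit


price_mid = (PRICE_LOW + PRICE_HIGH) // 2
revenue_low = deployment_revenue(SYSTEMS_PHASE_ONE, PRICE_LOW)
revenue_mid = deployment_revenue(SYSTEMS_PHASE_ONE, price_mid)
revenue_high = deployment_revenue(SYSTEMS_PHASE_ONE, PRICE_HIGH)
uplift_mid = revenue_mid / DATA_CENTER_BASE

print(f"Low:  ${revenue_low / 1e9:.0f}B")
print(f"Mid:  ${revenue_mid / 1e9:.0f}B")
print(f"High: ${revenue_high / 1e9:.0f}B")
print(f"Midpoint uplift vs. $115B base: {uplift_mid:.0%}")
```

The range runs from $54B to $72B, with the $63B midpoint equal to a bit over half of the $115B base. The result is very sensitive to the per-system price, so a different pricing assumption moves the estimate proportionally.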

The ME deals, along with the fact that the US DoC rescinded the Biden-era AI Diffusion rule, mean Nvidia gets another chance to revive its Sovereign AI vision. The last update from Nvidia’s CFO was last year when she said that Sovereign AI was expected to bring in at least $10B in revenues in 2024.

The way we see it, Sovereign AI is going to be critical for Nvidia to keep its ex-US revenues growing rapidly, especially since Huang revealed this week that Nvidia’s market share in China’s AI chip market was sliced down to 50% from 95%.

Meanwhile, Nvidia’s peer, AMD, is also going to benefit from Humain’s investments in the region, but with fewer details in AMD’s announcement as compared to Nvidia’s release, it was difficult to ascertain the exact benefit AMD would see in its revenues. AMD also had a more muted Computex presence as compared to its larger GPU peer, but we expect more details to be announced at AMD’s flagship event, Advancing AI, next month, where it is widely believed that the MI350 GPU, AMD’s next iteration of the MI300X GPU systems, will be launched.

AMD is also making strides in quickly transforming its GPU business towards Nvidia’s rack-scale system model by fully absorbing ZT Systems staff from a previous acquisition. Popular blog SemiAnalysis believes AMD will finally be uniquely positioned to fully take on Nvidia’s dominance in GPU server systems by next year.

Below is our update on our price targets for semiconductor companies covered in this post. Along with the price targets, we’ve also put a list of key events that we will be watching over the next month, which we believe may alter the course of the semiconductor landscape.