NVIDIA’s chips are the tastiest AI can find. Its stock still has a way to go.

Nvidia has always been at the right place at the right time, leading all the major innovation cycles of the past two decades. It is now getting ready to power the next industrial revolution, led by AI.

«The 2-minute version»

Nvidia is now bigger than Amazon and Tesla combined. With a $2.8T market cap, it is closing in on Apple and Microsoft. With a glorious earnings performance, where revenue and earnings grew 262% and 690% YoY respectively, Nvidia’s chips are indeed the tastiest AI can find.

Right place at the right time: If one thing is clear by now, it is that Jensen Huang is a master visionary. Think about it. From graphics rendering, gaming and media to cloud computing and crypto, Nvidia’s chips have led the way in each of these innovation cycles as its GPU applications expanded over the last two decades. And now it is getting ready to advance the next industrial revolution, which will be powered by AI.

Globalization played a key role: In case you are wondering what happened to the once indomitable Intel, Nvidia shrewdly stole its crown when it forged partnerships with nanometer-focused, leading-edge chip foundries like TSMC to manufacture its chips, leading to faster innovation and higher markups.

Welcome to the age of accelerated computing: With companies and countries partnering with Nvidia to build a new type of data center, which Jensen Huang calls “AI factories”, Nvidia is positioned to power the AI boom, as it races to deliver the infrastructure for the next generation of AI applications. For instance, it is Nvidia’s latest H200 chips that powered OpenAI’s GPT-4o demo, which demonstrated a fundamental shift in human-computer interaction.

No slowdown in sight, just yet: With H200 and Blackwell-based chips in production, management indicated that demand is ahead of supply well into next year. Plus, management is particularly bullish on autonomous driving and consumer internet companies, as Meta Platforms and Tesla have already projected strong double-digit growth in spending to increase the capacity of their data centers and AI infrastructure.

Should you buy? While we are not investors and won’t be initiating a position at the moment, we believe that Nvidia will be a long-term beneficiary of accelerated computing, where LLMs become a prevalent part of workloads with the rise of copilots, digital assistants and more. Plus, the surging inference revenue could possibly shield Nvidia from large scale cyclicality that semiconductor companies are often exposed to.

Chances are high that Nvidia’s (NVDA) name came up if you recently engaged in a conversation with your friends, family, or co-workers about AI or investing in the stock market.

Yet another quarter goes by as Nvidia, the maker of specialized semiconductor chips that power most of the world’s data centers and computers, continues to defy the laws of gravity.

If you think you missed out on Nvidia’s rally, you’re not alone. Even large enterprises that couldn’t see the coming AI boom were forced to re-evaluate their decisions, leading to a mad rush for Nvidia’s chips. This led to record business for Nvidia, with the Santa Clara, CA-based company posting its fourth consecutive quarter of growing its sales by more than 2x.

Naturally, the Pragmatic Optimists in us decided to take a good long look at Nvidia, breaking down its business, understanding the root cause behind the company’s hype, evaluating the health of Nvidia as a company, and arriving at our estimates for any potential further upside in the company’s shares. [Hint: We think there still may be 14-16% upside left from its current levels to a price target of $1200+ 😉]

Somehow, Nvidia has always managed to be at the right place at the right time

The first time I learned about Nvidia was in 2005, when my PC conveniently froze just as I began to play the latest FIFA Soccer video game. My father thought it was a computer virus that I had loaded from the FIFA Soccer game, so he urgently called for his computer technician to take a look at it. Unlike me, my dad needed the PC to get some meaningful work done, since he used Adobe Photoshop for most of his media work.

After a quick inspection, our technician suggested a new Graphics Processing Unit (GPU) chip from a company called Nvidia that would ‘accelerate’ the processing power of the computer and address both our needs: my father’s need for working on media projects and my ‘need for working on’ (ahem) gaming projects.

As part of my course curriculum at the time, I had just begun studying about computers, so naturally, my curiosity got the better of me, and I asked him why Intel’s central processing units (CPUs) were not enough for our computer to support our gaming and media application software. The technician’s response was swift. If Intel’s processors were the brains computers needed to run, Nvidia’s GPUs acted as the computer's eyes and muscles, allowing it to do many more things at once.

Here’s how the hosts of the former hit television series MythBusters, Adam Savage & Jamie Hyneman, cleverly illustrate the difference between Intel’s CPUs and Nvidia’s GPUs using paintballs as an analogy.

What I was oblivious to as a kid at the time was that the world’s computing needs were rapidly evolving from basic document processing, such as Word and Excel, to more multimedia-related tasks, such as movies and games, and the GPUs that Jensen Huang’s Nvidia invented were right there to capture the new market, at the right place, at the right time.

In that decade, between 2000 and 2010, the world successfully emerged from the dot-com bust and the 2008 global financial crisis as more websites popped up online. The proliferation of games and multimedia applications boosted the demand for Nvidia’s GPUs, but Jensen Huang already had other plans.

By 2011, Nvidia had already begun to reposition its GPU strategy towards mobile computing as Apple supplanted Blackberry as the face of the smartphone revolution. In addition, the concepts of cloud computing, cryptomining and data centers had started to take shape, forming the basis for the demand that Nvidia is seeing today. Once again, Jensen Huang’s Nvidia was in the right place at the right time.

Nvidia’s path to AI domination and a cloud computing powerhouse was decades in the making

Another example of how Nvidia was at the right place at the right time was the deep relationships that it built with academics and researchers. Andrew Ng, then a Stanford researcher, argued the case for Nvidia's GPUs in AI in a 2008 paper. In the paper, he said:

“Modern graphics processors far surpass the computational capabilities of multicore CPUs, and have the potential to revolutionize the applicability of deep unsupervised learning methods.”

In practice, this meant that, using just two NVIDIA GeForce GTX 280 GPUs, his three-person team achieved a 70x speedup over CPUs when processing an AI model with 100 million parameters. According to the paper, training an AI model of that size at the time would have taken several weeks on CPUs, but Ng was able to train his model in just one day using two Nvidia GPUs. The findings in Ng’s paper were further backed up by Geoffrey Hinton, one of the Godfathers of AI, who later revealed in an interview:

“In 2009, I remember giving a talk at NIPS [now NeurIPS] where I told about 1,000 researchers they should all buy GPUs because GPUs are going to be the future of machine learning.”

Here is an excerpt from Ng’s presentation during Nvidia’s GTC Conference in 2015, where he uses his famous space-rocket analogy to explain why he believed deep learning, ML, and AI would be the next big wave the computing industry would experience.

Between 2011 and 2015, Ng held senior research roles at large technology firms, Google included, where he deployed similar data center architectures based on Nvidia’s GPUs.

Around the same time, Nvidia once again reorganized its business segments to better align the company with the wave of computing demand that a growing number of industry experts and AI enthusiasts had begun predicting. Gaming, media, and visualization were still big markets for Nvidia, but the company was already looking ahead.

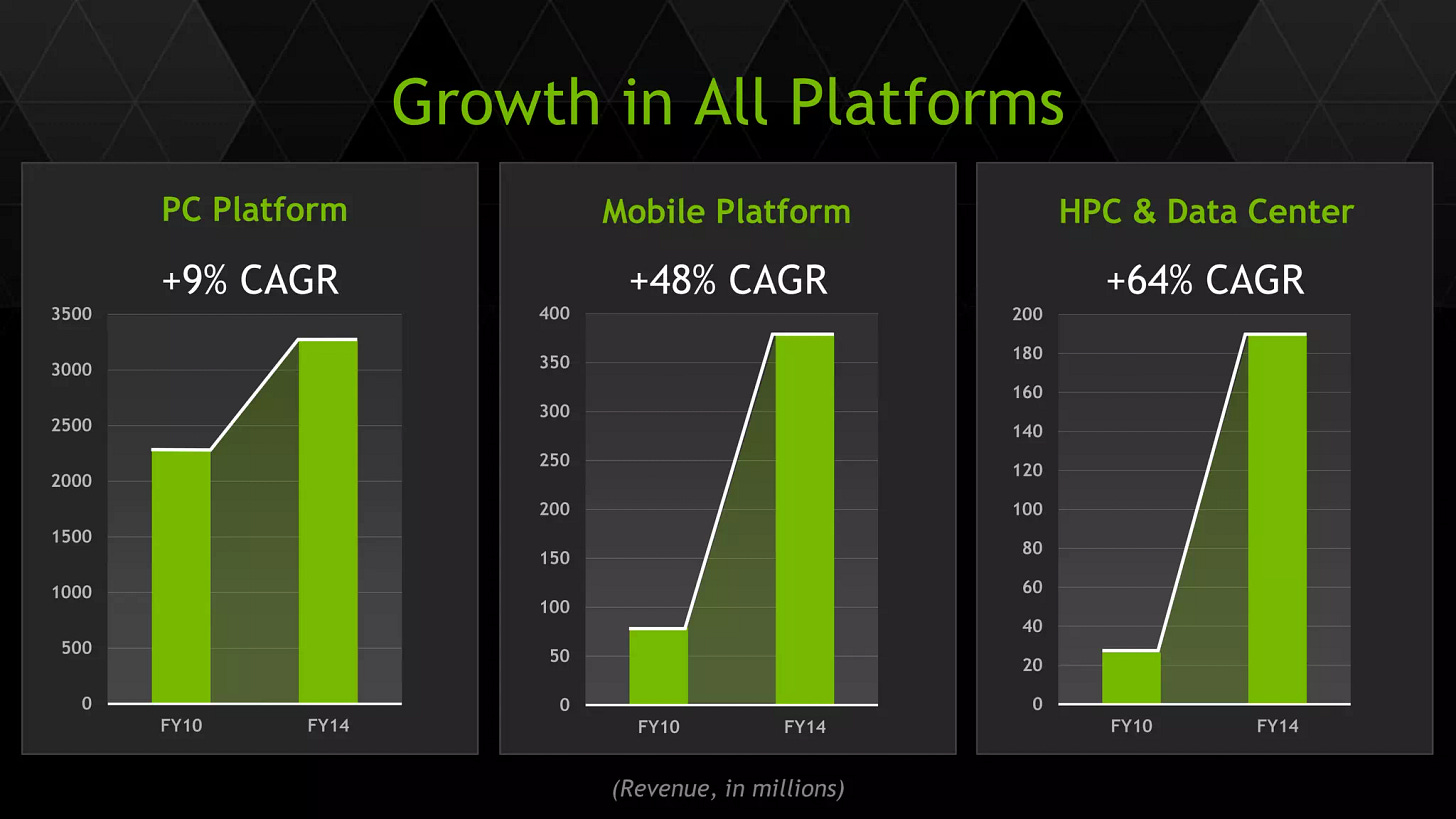

Here is a slide from Nvidia’s 2014 presentation to investors that showcased the strength of its Data Center and HPC (high-performance computing) segment, which grew at a compound annual growth rate of 64% between 2010 and 2014, far outpacing Nvidia’s other segments.

This period between 2010 and 2014 was one of the defining transitions that set Nvidia on course to dominate AI as we see it today. Nvidia’s revenue by segment over the last decade shows that these reorganizations paid off and that Huang was correct in anticipating where demand was heading.

Fun fact: 😹 The period between 2010 and 2014 was also the time when Nvidia’s Huang started cultivating his trademark leather jacket look, which his admirers have come to love.

Globalization, the other tailwind that shaped Nvidia’s manufacturing efficiency

Anyone who has anything to do with semiconductors knows that there are two basic ways of manufacturing chips at scale.

Intel (INTC) is one of the most popular examples of a semiconductor fabrication, or fab, company that designs and manufactures its own chips. But manufacturing chips can be hard and expensive. In the words of Fabricated Knowledge’s post that explains this business, "this is partially due to the increasing scale and absolute difficulty of making a semiconductor at the leading edge."

The “leading edge” that Fabricated Knowledge talks about refers to process nodes, a key measure of the components that go into a chip, expressed in nanometers. Basically, the lower the nanometer number, the more components can be packed onto a chip and the more processing power it delivers. Today, only chip foundries such as Taiwan’s TSMC (TSM) and South Korea’s Samsung are able to maintain that leading edge, as shown in the graph below.

What does this have to do with Nvidia?

The nanometer-focused leading edge that chip foundries like TSMC were able to maintain allowed chip companies such as Nvidia and AMD (AMD) to design and produce relatively powerful chips at a quicker pace, leaving Intel far behind.

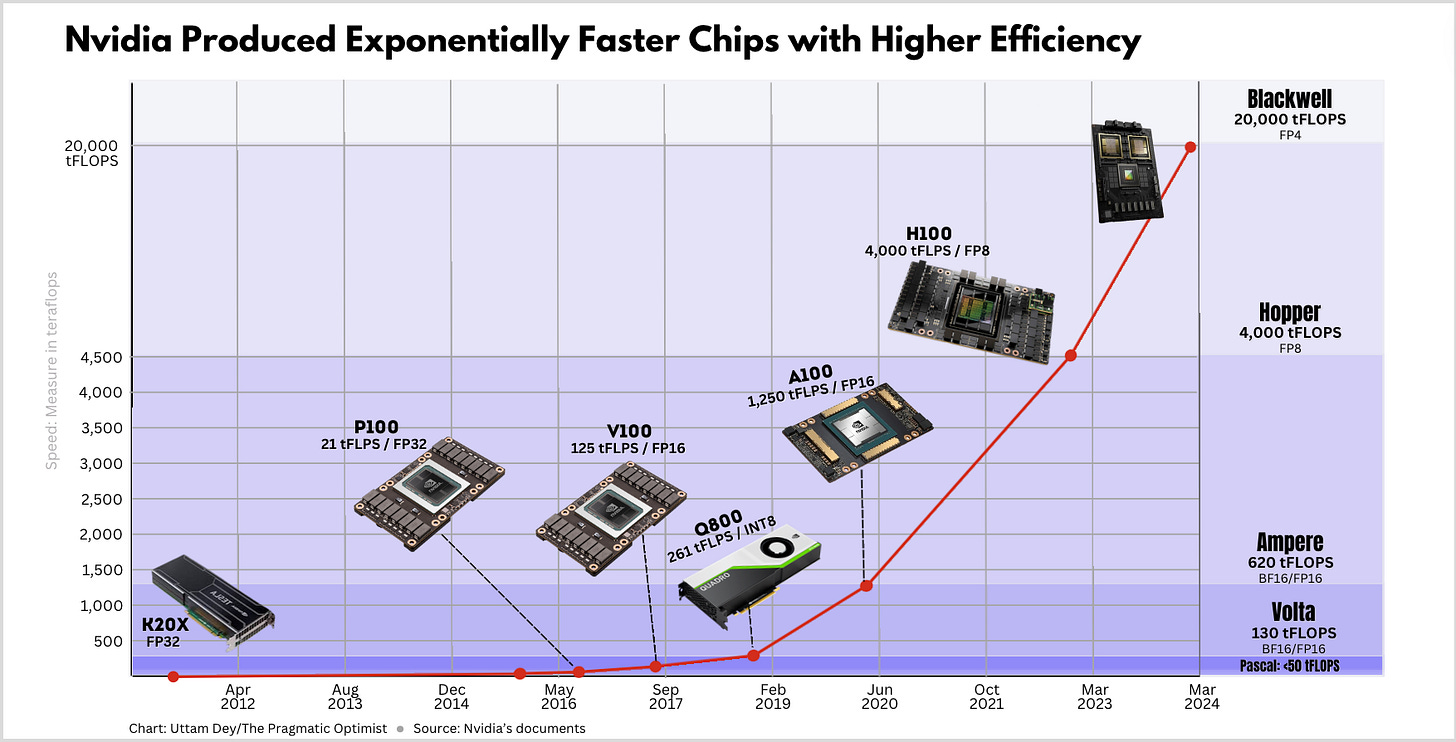

In the semiconductor world, Nvidia and AMD are called fabless chip companies because they design their own chips, while chip foundries such as TSMC take those designs and manufacture the chips for them. Nvidia and AMD then sell the finished chips at a higher markup. The chart below shows how Nvidia got exponentially better at designing faster chips (as measured in teraflops, or TFLOPS), which were manufactured by TSMC.

Over time, the manufacturing efficiency that Nvidia unlocked because of its partnership with TSMC, in addition to the superior chips that Nvidia developed, helped it gain an incredible hold over the market, as seen in the graph below.

Yet again, Nvidia must reposition itself for the future

If you haven’t noticed already, go back to the previous chart, and you’ll see that it also includes projected market share for the next few years. Of course, these are projections and estimates and must be taken with a grain of salt, but they do indicate that 2024 could be the first year that Huang’s Nvidia cedes some market share to AMD, another major chip company, expertly run by Huang’s cousin, Lisa Su. Intel is also expected to take back a small slice of the market. How is that possible?

Both AMD and Intel have launched their own AI chip products for the data center, aimed at stealing back share from Nvidia’s H100/H200 data-center-focused chips. Intel launched its Gaudi 3 AI accelerator chip, and AMD launched its MI300 series around the same time, with plans to share more details at an upcoming June event. Based on the orders and customer base of AMD and Intel, market analysts believe there will be a low single-digit dent in Nvidia’s market share this year, as noted in the previous market share chart.

But Nvidia’s Huang is already looking ahead. He believes that the company must now turn its attention to the next leg of AI: Model Inference. Most of the world’s most popular models, led by OpenAI’s GPT, Meta Platforms’ Llama, and Anthropic’s Claude, have spent their time so far getting trained and learning from data. The next stage in AI will be Model Inference, where trained models are tasked with constantly taking in new data from devices and returning predictions based on what they learned during training. Both the Model Training and Inference stages are expected to keep running in data centers, as well as in AI Factories, a new data center concept that Huang floated in March.
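To make the training-versus-inference split concrete, here is a minimal sketch in plain Python. It is a toy one-variable model fitted with gradient descent, not how GPT, Llama, or Claude are built, and the data, function names, and hyperparameters are all hypothetical. The point is only the shape of the workload: training loops over known data many times to adjust the weights, while inference is a single cheap pass with the weights frozen.

```python
# Toy illustration of training vs. inference (hypothetical data and names).

def train(samples, epochs=5000, learning_rate=0.01):
    """Training: repeatedly adjust the weights to shrink the error on known data."""
    weight, bias = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in samples:
            error = (weight * x + bias) - y
            # Gradients of the mean squared error with respect to weight and bias.
            grad_w += 2 * error * x / n
            grad_b += 2 * error / n
        weight -= learning_rate * grad_w
        bias -= learning_rate * grad_b
    return weight, bias


def infer(model, x):
    """Inference: apply the frozen weights to an input the model has not seen."""
    weight, bias = model
    return weight * x + bias


# Hypothetical training data that follows y = 2x + 1.
training_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0), (4.0, 9.0)]

model = train(training_data)      # expensive: thousands of passes over the data
prediction = infer(model, 10.0)   # cheap: one multiply and one add
print(f"Prediction for x=10: {prediction:.2f}")
```

Training here takes thousands of passes over the data, while each inference call is a single multiplication and addition. At production scale the same asymmetry holds, except that inference requests arrive continuously from millions of users, which is why it can become a large, recurring source of chip demand.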

Here again, Fabricated Knowledge’s niche post on Substack goes deeper into explaining Training vs. Inference. We’ve also borrowed and slightly modified a chart from their post down below to explain Model Training vs Inference.

Naturally, this opens up newer markets for Nvidia to pursue while still maintaining their grip on the wider AI datacenter market. Some industry experts believe that 20% of the demand for AI chips next year will be due to model inference needs, with “Nvidia deriving about 40% of its data center revenue just from inference.”

Nvidia’s executives also believe the company can benefit from demand from specific industry verticals. Nvidia’s CFO, Colette Kress, said she expects “automotive to be our largest enterprise vertical within the Data Center this year, driving a multibillion revenue opportunity.” In an interview with Yahoo Finance last week, Huang said, “Tesla is far ahead in self-driving cars.” He also said:

"It [Tesla's full self-driving model] learns from watching videos—surround video—and it learns about how to drive end-to-end, and using generative AI, predict the path and how to understand and how to steer the car.”

This was a reference to the video transformers that Tesla uses to train its FSD model on its GPU cluster, which at last count had 35,000 Nvidia H100 GPUs. Companies like Tesla and Meta Platforms have been significantly ramping up their purchases of Nvidia’s chips and expect to continue doing so over the next year. Here is a chart of what that looks like through 2023.

In recent calls with investors, the executives of Meta Platforms and Tesla have already projected strong double-digit growth in the investments they are planning to make in increasing the capacity of their data centers and AI infrastructure. As long as these large technology firms continue to spend on upgrading their infrastructure, there certainly does not seem to be any indication of a deep slowdown in Nvidia’s revenue.

To us, this definitely looks like Nvidia is, yet again, at the right place at the right time and well positioned for future growth. Automotive AI and Sovereign AI are two future areas of growth, while enterprises across the technology and consumer internet industries continue to spend on their data centers for their model training and inference needs.

Plus, Nvidia recently launched their new Blackwell platform in March this year that has powerful implications for AI workloads and can reportedly help deliver discoveries across all types of scientific computing applications, including newer areas such as Quantum Computing and Drug Discovery.

🥹Before we get into assessing Nvidia’s valuation, please know we are not Nvidia investors at the moment. So, if you want to console us for missing out on the monster rally, please consider buying us a coffee and a muffin and it will really help boost our morale. 🥹

Nvidia’s stock still has value despite its explosive growth

Over the past decade or so, Nvidia has grown its total sales at a compound annual growth rate of ~33%, as seen in the chart below. During that time, the company’s revenue growth rose as high as 50–60% during cyclical highs, while normalizing to mid-teens growth rates during cyclical lows.

At the Pragmatic Optimist, we still believe there is enough runway for the company to almost double its revenue again in 2024, given the massive demand for Nvidia’s chips. Beyond that, however, we expect growth to normalize to the mid-teens range, as illustrated below. That means, between 2023 and 2026, Nvidia’s sales should be growing at a compound annual growth rate of 43–45%.
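For readers who want to see how a multi-year growth path rolls up into a single compound annual growth rate, here is a minimal Python sketch. The function name and the growth path are hypothetical illustrations, not the inputs of our actual model.

```python
def cagr_from_growth_path(yearly_growth):
    """Compound annual growth rate implied by a sequence of yearly growth rates.

    Each entry is a fraction, e.g. 1.00 means revenue doubles that year.
    """
    multiple = 1.0
    for growth in yearly_growth:
        multiple *= 1.0 + growth
    return multiple ** (1.0 / len(yearly_growth)) - 1.0


# Hypothetical path: revenue doubles in year one, then grows 15% in each
# of the following two years.
illustrative_path = [1.00, 0.15, 0.15]
illustrative_cagr = cagr_from_growth_path(illustrative_path)
print(f"Implied CAGR: {illustrative_cagr:.1%}")
```

With these illustrative inputs, the implied rate comes out at roughly 38%. The result is sensitive to the first-year figure: a first year somewhat above a doubling, or later years above 15%, pushes the three-year rate into the 40s.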

In terms of profitability, the company has gotten so good at optimizing its chip business that it now boasts a 54% operating profit margin, per its recent annual filings. Over the next 3 years, we expect operating profit to grow in line with revenue growth, with operating profit margins remaining relatively flat in 2025 and 2026. When discounting future cash flows from Nvidia, we have used a 10% discount rate, consistent with the stock’s beta levels, cost of equity, and cost of debt.
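As a rough illustration of what discounting at 10% does to future cash flows, here is a minimal Python sketch. The cash-flow figures are hypothetical placeholders rather than our projections, and the function assumes each cash flow arrives at the end of its year.

```python
def present_value(cash_flows, discount_rate):
    """Discount cash flows received at the end of years 1, 2, 3, ... back to today."""
    return sum(
        cash_flow / (1.0 + discount_rate) ** year
        for year, cash_flow in enumerate(cash_flows, start=1)
    )


# Hypothetical cash flows in $ billions for the next three years.
hypothetical_cash_flows = [100.0, 115.0, 132.0]
pv = present_value(hypothetical_cash_flows, discount_rate=0.10)
print(f"Undiscounted total: {sum(hypothetical_cash_flows):.1f}")
print(f"Present value at 10%: {pv:.1f}")
```

The further out a cash flow sits, the more the 10% rate shrinks it, which is why the choice of discount rate matters so much for a company whose value rests on profits several years away.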

Based on the growth rates in its profits that we noted and the total shares outstanding of ~2.6 billion shares, we believe the company warrants an earnings multiple of ~34x forward earnings. That implies at least 14–16% upside in Nvidia’s stock from current levels to a price target of $1250.
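The figures above can be cross-checked with simple arithmetic. The Python sketch below backs out the share price and forward earnings implied by the numbers quoted in this section. The function names are hypothetical, and multiplying earnings per share by the share count is a simplification that ignores dilution and buybacks.

```python
def implied_current_price(price_target, upside):
    """Share price today that would leave `upside` (a fraction) to the target."""
    return price_target / (1.0 + upside)


def implied_forward_eps(price_target, earnings_multiple):
    """Forward earnings per share implied by a target price and a P/E multiple."""
    return price_target / earnings_multiple


price_target = 1250.0        # from the text
earnings_multiple = 34.0     # ~34x forward earnings, from the text
shares_outstanding = 2.6e9   # ~2.6 billion shares, from the text

low_price = implied_current_price(price_target, 0.16)
high_price = implied_current_price(price_target, 0.14)
forward_eps = implied_forward_eps(price_target, earnings_multiple)
implied_net_income = forward_eps * shares_outstanding

print(f"Implied current price range: ${low_price:,.0f} to ${high_price:,.0f}")
print(f"Implied forward EPS: ${forward_eps:.2f}")
print(f"Implied forward net income: ${implied_net_income / 1e9:.1f}B")
```

In other words, a $1250 target with 14–16% upside corresponds to a share price of roughly $1,080 to $1,100 today, and a 34x multiple on that target implies forward earnings of about $37 per share.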

In closing…

Granted, the potential returns that we estimated for Nvidia’s stock seem marginal given the monstrous growth that Nvidia has already demonstrated. But this appears to be that time of the decade when Huang is resetting the course for Nvidia again to enter new markets, aided by the boost in efficiency that Nvidia’s Blackwell platform should provide.

Like we said earlier, we do not hold positions in Nvidia at the moment. But had we owned shares, I believe we would have taken a similar course of action as

outlines in his note below.

That’s all for today. What are you doing with your Nvidia position? Are you buying, holding or selling? Please let us know in the comments section below.

DISCLAIMER: This is solely my opinion based on my observations and interpretations of events, based on published facts and filings, and should not be construed as personal investment advice. (Because it isn’t!)